AI “Therapy” at Sea: When Chatbots Help (and When They Can Make Things Worse)

- IMEQ CENTER

- 2 days ago

You’re on board, it’s late, and you can’t sleep. You don’t want to worry your family. You don’t want to “make a big deal” of it on board. So you open an AI chatbot and type: “I feel stressed. What’s wrong with me?”

This is becoming a real trend, and psychologists in the maritime sector are already warning about the risks of using AI as mental health support, especially when someone is vulnerable.

AI can be useful in small, practical ways. But it can also be unsafe if you use it like a therapist, or if you share private details without thinking. The goal of this article is simple: help you use AI safely, and protect your mental health onboard.

Why AI feels so helpful when you’re at sea

AI is:

Available 24/7

Non-judgmental

Fast

Private (or at least it feels private)

And when you’re tired, isolated, or under pressure, the brain naturally looks for quick relief.

But here’s the psychological catch:

In hard moments, we don’t need “nice words.” We need safe help.

Some chatbots are designed to agree, comfort, and keep you talking. That can feel good short-term, but it may miss risk, reinforce unhealthy thinking, or make you depend on it emotionally.

The big risks (in simple terms)

1) Privacy risk: you may share too much

If you type personal information (names, vessel details, medical info, photos), you may lose control of where it goes. Many tools are not health-care services and don’t follow medical privacy standards.

2) Wrong advice risk: confident doesn’t mean correct

AI can sound sure even when it’s wrong. That’s dangerous with sleep, panic, depression, medication, alcohol use, or trauma.

The American Psychological Association (APA) has warned that many AI chatbots and “wellness” apps don’t have enough evidence or regulation to keep users safe.

3) Crisis risk: AI is not for emergencies

If someone is in crisis (self-harm thoughts, feeling unsafe, severe panic, psychosis), AI is not the right support.

Maritime industry guidance specifically points seafarers to trained help like SeafarerHelp for urgent emotional support.

So… should seafarers use AI at all?

Yes, but as a tool, not as a therapist.

Think of AI like a basic gym app:

It can guide a routine.

It can’t diagnose your injury.

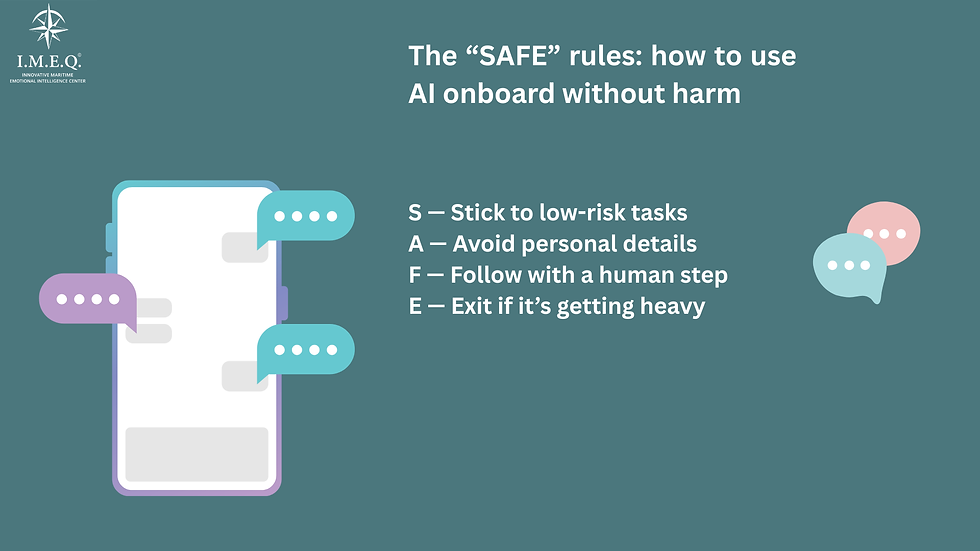

The “SAFE” rules: how to use AI onboard without harm

S — Stick to low-risk tasks

Use AI for:

journaling prompts (“Help me write what I’m feeling in 5 lines.”)

simple breathing steps

planning a healthy routine (sleep / exercise / food)

communication help (how to say something calmly to a crewmate)

learning basics (what anxiety is, what burnout is; general information only)

Avoid using AI for:

diagnosis (“Do I have depression?”)

medication advice

trauma processing (“Tell me what my childhood means…”)

relationship “decisions” in high emotion (“Should I divorce?”)

anything that replaces real support

(If you use it, keep it practical and short.)

A — Avoid personal details

Do not type:

your full name, crew names, vessel name, company details

medical records

private photos

passwords, codes, or documents

If you need help, write like this instead:

“A person working long shifts at sea is feeling stressed and can’t sleep. What are 3 safe steps they can try tonight?”

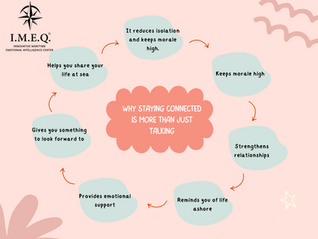

F — Follow with a human step

After AI gives you suggestions, do one human action:

talk to a trusted crewmate

tell your officer you’re not sleeping well

contact your company support (if available)

contact a seafarer helpline if you need emotional support

ISWAN’s SeafarerHelp exists for this: free, confidential, multilingual, 24/7.

E — Exit if it’s getting heavy

Stop using AI and get real help if you notice:

you’re using it for hours

you feel worse after chatting

you start believing “only the bot understands me”

you’re hiding how bad things are

you have thoughts of self-harm or feel unsafe

Recent real-world cases and investigations have increased scrutiny of how safe chatbots are for people in vulnerable situations. Don’t gamble with your wellbeing.

A 7-minute “Mental Reset” you can do after your shift (with or without AI)

Minute 1: Name it

Say (to yourself): “I’m stressed / lonely / angry / tired.”

Naming feelings reduces pressure.

Minute 2–3: Breathe (simple)

Inhale 4 seconds → hold 2 → exhale 6. Repeat 5 times.

Minute 4: Body check

Drink water. Stretch neck/shoulders.

(When the body calms, the mind follows.)

Minute 5: One small control

Pick ONE:

tidy your bunk area for 2 minutes

prepare tomorrow’s socks/uniform

write a 3-line plan for tomorrow

Minute 6–7: Connection

Send one message to someone safe, even short:

“Just finished my shift. Tired today. Hope you’re okay.”

If you want to use AI here, use it only like this:

“Give me a short message I can send home that sounds warm but simple.”

Quick checklist: “Am I using AI in a healthy way?”

I’m using it for skills, not therapy.

I’m not sharing private details.

I stop if I feel worse or dependent.

If things feel serious, I contact a human support option (company channels / SeafarerHelp / professional support).
